Kyle E. Mathewson, PhD

Associate Professor, Faculty of Science - Psychology

Director, Attention Perception and Performance Lab (APPLab)

University of Alberta

Edmonton, AB

Email: kmathews@ualberta.ca

Phone: (780) 492-2662

Office: P-455 Bio Science - Psychology Wing


Complete CV

UAlberta Directory

Lab Website

@kylemath

Publications

Lectures

@mathkyle

@kylemath

Network

Album

I am an Associate Professor in the Department of Psychology, Faculty of Science, at the University of Alberta. I was previously a Postdoctoral Fellow at the Beckman Institute at the University of Illinois at Urbana-Champaign and in the Department of Psychology at the University of Alberta. I received my PhD in 2011 from the Brain and Cognition Division of the Department of Psychology at the University of Illinois. There I worked in the Cognitive Neuroimaging Lab of Drs. Monica Fabiani and Gabriele Gratton, supported by a Postgraduate Scholarship from the Natural Sciences and Engineering Research Council of Canada (NSERC). I received my B.A. in Psychology (Honours; First in Graduating Class) from the University of Victoria in 2007, completing my honours thesis in the Learning and Cognitive Control Lab under the supervision of Dr. Clay Holroyd.

As Director of the Attention Perception and Performance Lab (APPLab) in the Department of Psychology at the University of Alberta, I lead research in cognitive neuroscience focusing on visual awareness, attention, learning, and memory. Our lab combines human behavioral studies, neuroimaging, and electrophysiological recording. We're particularly focused on developing portable, accessible brain-sensing technologies and studying attention in real-world environments. Our work ranges from basic research on neural oscillations to applied projects such as stroke diagnosis and sports performance.

Beyond academia, I engage in independent consulting work, applying neuroscience and cognitive psychology principles to real-world challenges. My expertise in portable brain-sensing technology and human performance optimization has led to collaborations with various industries and organizations.

Outside of my academic work, I'm actively involved in the Edmonton community, particularly in youth sports development. I serve as a soccer coach for the Juventus Soccer Club, working with the 2013 Boys T3 team. I'm also an active player myself in the Edmonton and District Soccer Association, combining my passion for sports with community engagement.

I share my life with my wife, Dr. Claire Scavuzzo, who is also at the University of Alberta. Together, we balance our academic careers with family life and various projects. Our children are active in technology and sports: my son is developing his coding skills (visible on his GitHub), shares his interests through his YouTube channel, and plays soccer with the Juventus club.

This combination of academic research, industry application, and community involvement allows me to pursue my goal of making neuroscience more accessible and applicable to everyday life, while maintaining a rich and balanced personal life.

Mobile-optimized "app arcade" catalogue featuring all projects as structured entries. Scroll through portrait cards that scale from 2 columns on mobile to 8+ on ultra-wide displays. Each card expands on hover and is rendered from structured data, ready for future React/Vite experiences.

Standalone experiences including EEGEdu, Juventus club site, and other lab or community projects.

Interactive web applications and digital experiences developed using modern web technologies, including JavaScript, p5.js, WebGL, and Web APIs.

Feature-length essays, zines, and interactive writing experiments from dedicated repositories.

Essays and extended analyses exploring the intersection of science, society, and innovation.

Our foundational work investigates the neural mechanisms underlying conscious visual perception. Through pioneering studies of alpha oscillations (8–12 Hz brain rhythms), we found that these rhythms periodically inhibit visual processing, so that awareness operates not as a continuous stream but in discrete pulses, a mechanism we call "pulsed inhibition." This work shows how brain rhythms gate access to awareness, explaining why we sometimes see and sometimes miss the same stimulus.

Our most-cited research established that the phase and power of alpha oscillations just before a stimulus appears predict whether we perceive it. By entraining these rhythms with flickering lights, we can create predictable fluctuations in awareness, demonstrating that the rhythm causally influences what reaches consciousness. This work has shaped our understanding of attention, perception, and the neural basis of subjective experience.
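To make "alpha phase and power" concrete, here is a minimal, stdlib-only Python sketch. It is not the lab's analysis pipeline. It estimates both quantities from a pre-stimulus window by correlating the samples with a 10 Hz complex sinusoid, i.e. a single DFT bin standing in for the whole 8–12 Hz band. The function name `alpha_power_and_phase`, the 250 Hz sampling rate, the 1 s window, and the synthetic signal are all illustrative assumptions. Real analyses commonly use wavelets or band-pass filtering with a Hilbert transform on recorded EEG.

```python
import cmath
import math


def alpha_power_and_phase(window, fs, freq=10.0):
    """Hypothetical helper: power and phase of one frequency before stimulus onset.

    `window` holds the samples immediately preceding onset, so sample k sits at
    t_k = (k - n) / fs seconds relative to onset (t = 0). Correlating with a
    complex sinusoid at `freq` (a single-bin DFT, used here as a stand-in for
    the 8-12 Hz band) returns A**2 and phi for a component
    A * cos(2*pi*freq*t + phi), exactly when the window spans whole cycles.
    """
    n = len(window)
    acc = 0j
    for k, x in enumerate(window):
        t = (k - n) / fs
        acc += x * cmath.exp(-2j * math.pi * freq * t)
    coeff = 2 * acc / n           # rescale so |coeff| equals the cosine amplitude
    power = abs(coeff) ** 2       # squared amplitude at `freq`
    phase = cmath.phase(coeff)    # radians in (-pi, pi], phase of the fit at t = 0
    return power, phase


if __name__ == "__main__":
    fs = 250  # assumed sampling rate in Hz
    n = fs    # assumed 1 s pre-stimulus window = 10 whole cycles at 10 Hz
    # Synthetic "EEG": a 10 Hz alpha wave (amplitude 2, onset phase 0.5 rad)
    # plus a 3 Hz slow wave that the 10 Hz estimate should ignore.
    window = [
        2.0 * math.cos(2 * math.pi * 10 * ((k - n) / fs) + 0.5)
        + 1.0 * math.cos(2 * math.pi * 3 * ((k - n) / fs))
        for k in range(n)
    ]
    power, phase = alpha_power_and_phase(window, fs)
    print(f"power={power:.3f}, phase={phase:.3f} rad")  # power=4.000, phase=0.500 rad
```

In a detection experiment, an estimate like this would be computed per trial and then compared between seen and missed targets. Because the window ends at onset, only pre-stimulus data enters the estimate, which is what lets phase and power *predict* the upcoming percept.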

Key papers:

Related studies:

Related projects:

Building on these foundational discoveries, the Attention Perception and Performance Lab follows a strategic four-stage approach to democratize neuroscience through pervasive, accessible brain-sensing technology. Our goal: develop robust, portable systems that can help everyone understand and optimize their cognitive performance in real-world environments.

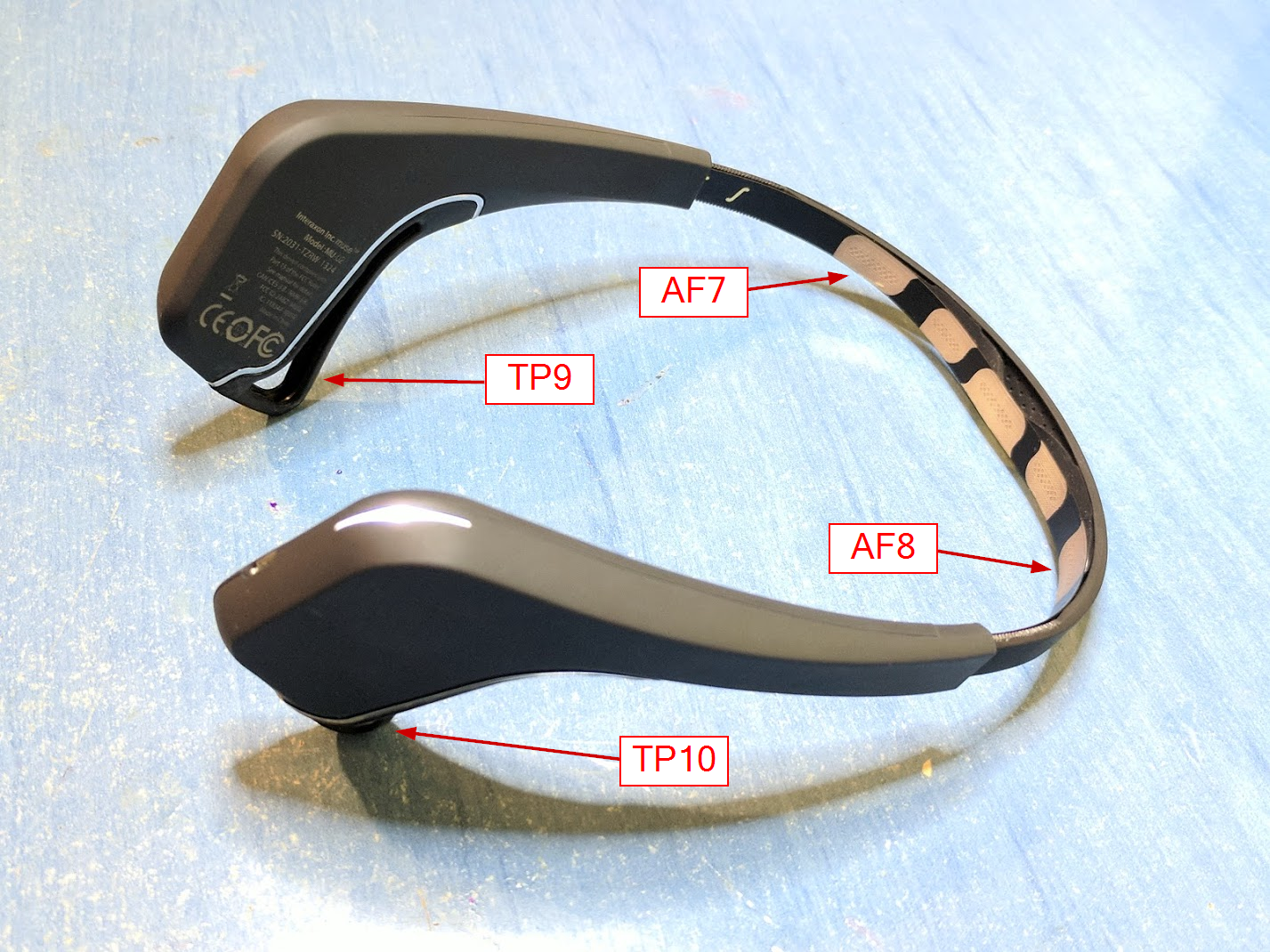

We engineer portable, high-quality neural recording systems that work beyond traditional lab constraints. Our portable EEG platforms enable 3-minute stroke diagnosis, while projects like flexible neural interfaces and MRI-compatible epidermal electronics push toward seamless, comfortable brain monitoring. Future directions: Developing ultra-miniaturized, wireless neural dust and breathable electronic tattoos for continuous, imperceptible brain monitoring.

Our collaboration with materials scientists has pioneered the next generation of wearable neural interfaces. These ultra-thin, breathable electronic systems conform to skin like temporary tattoos while maintaining research-grade signal quality. Unlike rigid electrodes, they enable comfortable, long-term monitoring compatible with advanced imaging (MRI) and real-world activities.

Key papers:

Related imaging research:

Related projects:

We develop and use open-source software infrastructure that makes brain recording as simple as using a smartphone. Tools such as muse-js (Web Bluetooth EEG), EEGEdu (an interactive brain playground), and analysis libraries (DeepEEG, fooof, pyoptical) democratize access to neurotechnology. Future directions: Real-time AI-powered brain state decoding and personalized cognitive optimization recommendations through everyday devices.

We validate neuroscience findings in natural environments, measuring brain activity while people cycle through traffic, skateboard, and shoot basketballs, and exploring how environmental factors modulate neural responses. This work reveals which lab findings translate to real life and which require revision. Future directions: Large-scale ecological studies using crowd-sourced brain data to understand cognitive performance across diverse populations and environments.

Related papers:

Related projects:

We deploy validated technologies across four domains: Research (open-source tools, reproducible workflows), Education (interactive learning platforms, programming courses), Consumer (creative brain-art interfaces, physiological monitoring), and Clinical (emergency stroke assessment, post-stroke rehabilitation). Projects span from Indigenous language preservation to creative fabrication. Future directions: Personalized cognitive enhancement, predictive mental health monitoring, and brain-responsive smart environments.

Related papers:

Related projects:

Beyond clinical and research applications, we explore how brain sensing can enable new forms of creative expression and human-computer interaction. These projects transform neural activity into art, music, and interactive experiences—making the invisible visible and creating intuitive interfaces between mind and machine.

Our creative projects use real-time brain activity to control generative art, navigate AI-generated imagery, and create responsive audiovisual experiences. These tools democratize neurotechnology by making it playful, engaging, and accessible to artists, educators, and the public.

Interactive brain-art platforms:

Visual perception & illusions:

Audiovisual experiences:

Physiological computing:

Related papers:

We develop tools that bridge digital design with physical creation, enabling novel forms of expression and personalized manufacturing. From voice-controlled 3D printing to color-mixing algorithms, these projects explore how computational creativity can enhance human imagination.

3D printing & fabrication:

Visual & generative tools:

Community & cultural projects:

Our newest work explores whether machines can understand and generate visual illusions, opening pathways to AI-human cognitive collaboration. We're developing computational methods that combine multivariate pattern analysis with web technologies to create the next generation of brain-computer interfaces that enhance rather than replace human cognition.

Related papers:

Related projects:

View all publications on Google Scholar

Last updated: January 2025